
Addressing Human Factor in Civil Engineering Using a Holistic Fuzzy Approach

Isabel Ferraris1, M. Daniel de la Canal1, Omar Fernández Pellon2

1Facultad de Ingeniería, Universidad Nacional del Comahue, Neuquén, Argentina

2Facultad de Economía, Universidad Nacional del Comahue, Neuquén, Argentina

Email address

(I. Ferraris)

Citation

Isabel Ferraris, M. Daniel de la Canal, Omar Fernández Pellon. Addressing Human Factor in Civil Engineering Using a Holistic Fuzzy Approach. International Journal of Civil Engineering and Construction Science. Vol. 3, No. 1, 2016, pp. 1-7.

Abstract

Engineering systems comprise different kinds of components: on the one hand, typical technological elements well known in the profession and, on the other, the people involved in all activities from design to decommissioning. The former are sometimes thought of as embedded in the latter, the so-called Human Factor (HF), on which they depend. In addition, available statistical information shows that most engineering failures are caused by HF. It therefore seems restrictive to talk about failures in engineering systems in purely technical terms. Technical and non-technical elements differ in nature and thus have different types of associated uncertainty. In modeling the former, stochastic and probabilistic techniques have proved to be powerful and valid tools. Nevertheless, HF characteristics, which are epistemic, require an alternative approach because they are qualified rather than quantified. The idea is to keep HF within acceptable levels, replacing the concept of solution with that of control. This paper presents a holistic approach based on fuzzy logic to model the control of HF. Fuzzy logic allows the inclusion not only of subjective and objective data but also of imprecise information, since propositions under this perspective may have different degrees of truth. In this way, decision makers can rely on a more solid basis to guide their future actions.

Keywords

Human Factor, Engineering, Control, Holistic Approach, Fuzzy Cognitive Maps

1. Introduction

Engineering systems comprise different kinds of components: on the one hand, typical technological elements well known in the profession and, on the other, the people involved in all activities from design to decommissioning. This statement affirms what engineers actually know and, in general terms, agree on. Over the past decades a great deal of effort has been invested in developing formal methods that try to approach the "very" reality that engineering practice has to face. Although engineering does not pursue the truth, it does try to approach it so as to guarantee good performance, safety and economic viability. This raises the question: how near is near enough for a model to be soundly accurate? Historically, the emphasis was placed mainly on technical matters. More and more information became available, knowledge has been extended, increasingly refined models and theories have been proposed and tested, and yet failures still occur. Engineers seem to be constantly challenged to address their own problems and revise their solutions. It appears that understanding is insufficient or inadequate and that relevant things are left aside. Among the latter, the influence of people's behavior is a typical example. Sometimes, in an attempt to take the human factor into account, a number of assumptions are formulated in order to validate outputs. Some codes, e.g. the Eurocodes [1] with their quality assurance (QA) measures as built-in security, use this perspective. On the other hand, some researchers argue that HF must be included in calculation algorithms, as in [2]. Whatever the approach, and keeping in mind that human errors will never be completely eliminated, they can instead be taken into account, controlled and managed.

In predicting the performance of an engineering facility, a number of uncertainties from diverse sources must be taken into account, since decisions have to be made. In this context the human factor is central: technical, hard systems are embedded in human, soft systems. It is argued that a better understanding of human behavior will help enhance models based purely on technological elements. On the other hand, the analysis of engineering problems goes from gathering information and evidence, and modeling them as well as possible, to reaching conclusions through a process that must account for the relevant factors while keeping in mind their inherent imprecision. Uncertainty, whatever its type, must be addressed, and risk thereby mitigated through its proper characterization. The presence of improperly characterized uncertainty can lead to a greater likelihood of an adverse event occurring, as well as to increased estimated cost margins as a means of compensating for that risk [3]. Diverse criteria can be followed to classify uncertainties, as the specialized literature shows, but in fact few conceptual differences can be found among them. Those related to the human factor, however, can be seen as epistemic [4] or can also be thought of as a special and separate type [5]. However they are conceived, their inherent characteristics make probability theory an unsuitable tool to model them. We propose looking beyond the purely probabilistic perspective of engineering systems in order to account for human factor uncertainty. A holistic conception, which goes further than an aggregation of parts, is proposed to address the whole problem.

In the following sections, the main characteristics of HF are presented; they inevitably lead to addressing civil engineering problems while taking their associated uncertainties into account. A brief discussion will show that HF, sometimes left aside, needs to be accounted for. In this way, better-grounded decisions can be made.

2. Human Factor

HF is an organized and highly complex system that intervenes in the design, execution and maintenance of an engineering device, and on which context factors have a strong impact.

HF can be thought of as the system in which engineering is embedded and processes are performed and developed. When HF is addressed, problems become more complex; analysts therefore have to incorporate highly complex operations to account for it, and new uncertainties are introduced at the same time.

There is consensus within the engineering community, based on statistics and research, that HF plays a major role in failure mechanisms. The following points are extracted from [6]:

• Human rather than technical failures now represent the greatest threat to complex and potentially hazardous systems.

• Managing the human risks will never be 100% effective. Human fallibility can be moderated, but it cannot be eliminated.

• Different error types have different underlying mechanisms, occur in different parts of the organization, and require different methods of risk management. The basic distinctions are between:

1. Slips, lapses, trips, and fumbles (execution failures) and mistakes (planning or problem-solving failures). Mistakes are divided into rule-based mistakes and knowledge-based mistakes.

2. Errors (information-handling problems) and violations (motivational problems).

3. Active versus latent failures. Active failures are committed by those in direct contact with the patient; latent failures arise in organizational and managerial spheres, and their adverse effects may take a long time to become evident.

• HF problems are a product of a chain of causes in which the individual psychological factors (that is, momentary inattention, forgetting, etc.) are the last and least manageable links.

• People do not act in isolation. Their behavior is shaped by circumstances. The same is true for errors and violations. The likelihood of an unsafe act being committed is heavily influenced by the nature of the task and by the local workplace conditions.

• Safety-significant errors occur at all levels of the system, not just at the sharp end. Decisions made in the upper echelons of the organization create the conditions in the workplace that subsequently promote individual errors and violations. Latent failures are present long before an accident and are hence prime candidates for principled risk management.

These statements are taken as starting points in an attempt to address HF in engineering problems.

As noted above, "zero human errors" is conceptually impossible. HF is complex and unpredictable, without defined behavioral patterns. People may work individually, but they belong to a team, and the team belongs to an organization. A context surrounds all of these, as in a kind of nested arrangement. Human actions are difficult to estimate. So, since approaches based on bivalent, crisp mathematics are definitely not suitable [7], what sort of formal approach can be chosen to model HF?

Hard engineering problems have solutions. However, in problems involving HF, the concept of solution must be replaced by that of control.

Hence, to mitigate, control or keep HF values within certain margins, it is necessary to quantify their state. To describe and evaluate them, different linguistic variables can be defined; these are in fact strongly interrelated and linked in a network. The process of HF evaluation should be capable of:

• Detecting the natural variability of human behavior. Information is needed during the process.

• Representing the interactions between the principal variables. The variables that, based on evidence, best describe HF should be chosen.

• Accounting for the lower limit of acceptability of human behavior in a specific activity.

• Linking the obtained values with an action plan (decision-making process) that could, if needed, modify unacceptable levels of HF.
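As an illustration of how a single linguistic variable might be evaluated against a lower limit of acceptability, the following Python sketch fuzzifies a numeric score with triangular membership functions. Everything specific here is an assumption for illustration only: the variable (a hypothetical "team competence" score on a 0 to 10 scale), the labels low/medium/high, their breakpoints, and the acceptability limit `max_low`. The paper does not prescribe membership shapes or thresholds.

```python
def triangular(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership: 0 outside (a, c), 1 at the peak b.

    Setting a == b or b == c gives a left or right shoulder.
    """
    if x <= a or x >= c:
        return 1.0 if x == b else 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical linguistic variable "team competence", scored on a 0-10 scale.
COMPETENCE_LABELS = {
    "low": (0.0, 0.0, 5.0),
    "medium": (0.0, 5.0, 10.0),
    "high": (5.0, 10.0, 10.0),
}


def fuzzify(score: float) -> dict:
    """Degree to which the score belongs to each linguistic label."""
    return {label: triangular(score, *abc) for label, abc in COMPETENCE_LABELS.items()}


def is_acceptable(score: float, max_low: float = 0.3) -> bool:
    """Assumed lower-acceptability rule: membership in 'low' must not exceed max_low."""
    return fuzzify(score)["low"] <= max_low


print(fuzzify(7.5))
print(is_acceptable(7.5), is_acceptable(2.0))
```

For a score of 7.5 the memberships are 0 for low and 0.5 for both medium and high, so the score passes the assumed limit; a score of 2.0 belongs to low with degree 0.6 and is flagged, which is where the action plan of the last bullet would take over.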

At a first stage, going to the essence of what measuring is, the research group tried to associate this task with what is conventionally accepted as a scientific measurement [8] [9] [10]. Nevertheless, we argue that the methodology pursued should have the accepted typical technical components but, at the same time, has to go beyond the physical concept that defines a magnitude. That is, the search should be guided by questions about what and how to measure and how outcomes will be interpreted [7].

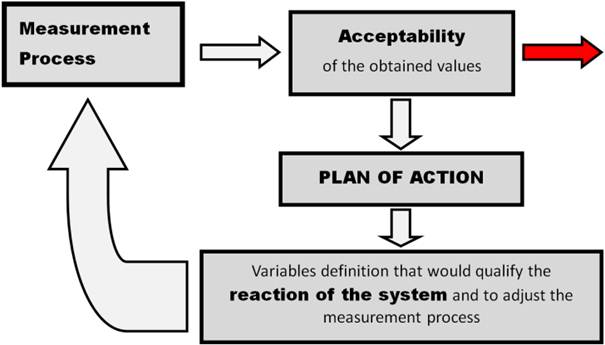

At a more detailed level, measuring HF means looking for proper parameters that represent and describe relevant aspects and performance against failure scenarios. They also have to be measured with some precision. The measurement has to be partial so that the current state can be modified if necessary, which leads to intervening in the organization. In this way, the reaction capacity of the system to the actions implemented to keep it on track can be detected by a subsequent measurement. The intention is to keep the chosen parameters around certain values previously agreed as acceptable. Fig. 1 sketches this process, which was outlined in [10].

Figure 1. Process of HF Evaluation and Control.

As can be seen, monitoring and control is a continuous task. While carrying out this course of action, however, limit values must not be reached, since staying within them guarantees good performance. These limit values may be established through an acceptability process. If they are reached, unacceptable functioning is assumed and the process is interrupted. In that case, the initial or boundary conditions of the problem have to be revised and eventually changed; the red arrow in Fig. 1 represents this situation, which can be interpreted as the system having to be reconfigured. Even once that is done, a practical dictum to follow might be "what looks acceptable today may not look so tomorrow" [11], so monitoring should go on.
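The decision logic of Fig. 1 can be sketched as a simple loop over successive measurements of one HF indicator. This Python sketch assumes an indicator normalized to [0, 1] where higher values are better; the thresholds `target` and `limit`, the class and function names, and the three action labels are hypothetical, since no numerical values are fixed here. A value at or below the limit interrupts the process (the red arrow), a value below the agreed target triggers corrective action followed by re-measurement, and anything else continues monitoring.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceBand:
    """Hypothetical thresholds for one HF indicator in [0, 1], higher is better."""
    target: float  # agreed acceptable level: below it, corrective action is taken
    limit: float   # value that must never be reached: the process is interrupted


def assess(value: float, band: AcceptanceBand) -> str:
    """Classify one measurement into the three branches of the control loop."""
    if value <= band.limit:
        return "interrupt"  # revise initial/boundary conditions (red arrow in Fig. 1)
    if value < band.target:
        return "correct"    # intervene in the organization, then measure again
    return "monitor"        # acceptable today; keep monitoring


def monitoring_cycle(measurements, band: AcceptanceBand) -> list:
    """Assess successive measurements, stopping at the first interruption."""
    log = []
    for step, value in enumerate(measurements):
        action = assess(value, band)
        log.append((step, value, action))
        if action == "interrupt":
            break
    return log


band = AcceptanceBand(target=0.7, limit=0.4)
for step, value, action in monitoring_cycle([0.8, 0.65, 0.75, 0.35, 0.9], band):
    print(step, value, action)
```

With this sample series the loop monitors at 0.8, corrects at 0.65, monitors at 0.75 and interrupts at 0.35, so the final value 0.9 is never assessed: after an interruption the system has to be reconfigured before monitoring restarts.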

Fallibility is a characteristic inherent to human beings, associated with the risk or possibility of making errors.

Human errors occur during the development of a system or project due to blunders or mistakes by one or more individuals. In general, human errors are difficult to estimate. However, measures such as education, a good work environment, a reduction in task complexity and improved personnel selection, as well as control measures such as self-checking, external checking, inspections and legal sanctions, have proved successful in reducing human errors.

If human errors are inevitable and ubiquitous, defenses will have to be built to manage them and to acquire the competence to face them. This is part of the aim of this paper.

The objective is to develop abilities within working groups to deal with adverse scenarios as well as interpersonal problems. This not only helps decrease human errors but also, when they do occur, diminishes their consequences and increases the capacity of the group to recover. This information may be used in future, similar situations. In this way a database could be created, useful for developing the capacity of individuals and groups to react when they have to face confusing, adverse or unexpected situations. This concept is what neuroscience calls resilience.

3. Uncertainty

Real-world problems are called "open universe problems", and from the perspective of human knowledge they present a high level and a wide variety of uncertainties. HF has strong implications in the universe of uncertainties.

Human knowledge, skills and perceptions of reality are limited. As noted, the borders of knowledge are constantly expanding. Nevertheless, the horizon of truth is far away and regularly blurs when we believe we have reached it.

We think of an engineering problem as a decision-making process in an environment of uncertainty. It is also an economic activity whose aim is to provide a safe and reliable service to the community.

Engineering is concerned with actions that actually modify reality, which must always be approached through models. Models may come close to the reality they try to characterize in appearance and performance, but the two will never be the same. Modeling implies that simplifications have to be made. Hypotheses must be formulated to obtain simple, rational and economic idealizations. A number of factors of the real problem are deliberately left aside, and a gap arises between reality and model; this kind of ignorance is usually called model uncertainty. Within the universe of what is not known, it is only the part that is not taken into account. On the other hand, the state of the art can be seen as a limit in terms of knowledge, as the totality is never known. This ignorance, the so-called incompleteness, is attributed to the limitations of human thought. We must be aware of it, since we cannot model what we do not know.

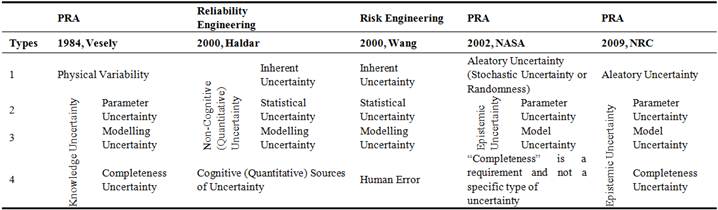

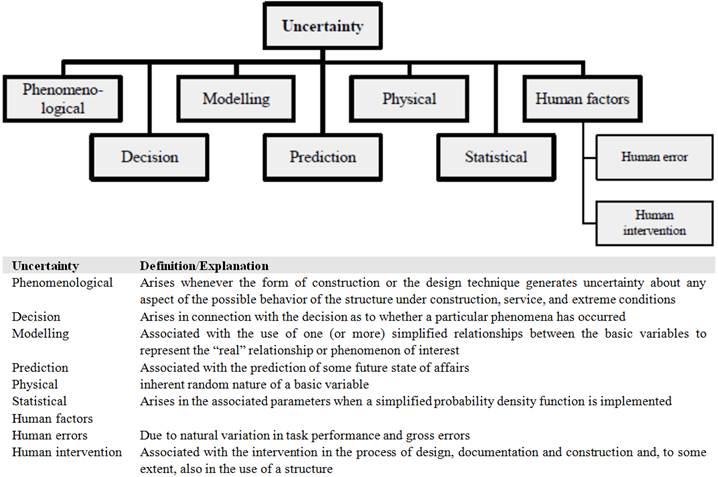

Returning to HF and its associated uncertainties, there are different engineering classifications. Table 1, from [12], and Fig. 2, from [4], show that they do not differ significantly.

With the aim of integrating different perspectives to model the uncertainties of engineering problems, HF is assumed to be the agent, context and instrument that observes, analyzes and intervenes in reality. An appropriate approach is necessary to account for and represent HF in the design, execution and maintenance of engineering projects. The proposal in the following section intends to accomplish this.

Table 1. Summary of Uncertainty Types: Terminology and Comparison from Selected Sources [12].

Figure 2. Uncertainty Classification from [4].

Table 1 summarizes classifications made by prestigious institutions associated with risk analysis and evaluation in engineering practice. HF uncertainty is conceived as epistemic, in parallel to aleatoric uncertainty. An aleatoric variable is addressed through a highly repeatable experiment [11]. Information about human actions can be collected as well; nevertheless, regularity cannot be inferred from it. The value of variables associated with human matters is highly context dependent. That is why, when information about a certain event is gathered, it cannot be extended to another event that seems analogous to the first. Statistical regularity cannot soundly represent human behavior.

Strictly speaking, different mathematical procedures are required to evaluate the different types of uncertainty and incorporate them into the algorithms. However, in many situations most uncertainties, whatever their type, are forced into formal algorithms based on classical bivalent mathematics through probability theory [5].

In general, engineering solutions are accepted if they work economically and safely, regardless of the truth values on which they are based.

Variables as well as the calculation models can be described with acceptable accuracy by statistical schemes. To use these tools, sufficient and appropriate information must be available.

Another interesting and pragmatic classification of uncertainty comes from [13], which points out that it is useful to think about three types of uncertainty:

• Aleatoric: randomness arises from fluctuations in time, natural spatial variations of material properties and inherent uncertainty associated with the measuring device.

• Epistemic: imperfect knowledge arises from the difference between the predictions of models and the reality the models intend to represent. Any structural analysis will always be an approximation.

• Surprisal: covers matters that are unexpected, those things that neither random variability nor limitations of model quality will cover. There are many sources of surprise. Virtually all arise from human factors (Reason J. 1990), from errors, slips and lapses, but this is not the only cause of failure.

Here, Elms defines a group closely linked to the quality of the HF that governs the process.

Based on the above evidence, we can think of HF as a particular type of uncertainty: behavioral uncertainty, which is the uncertainty in how individuals or organizations act. Behavioral uncertainty arises from four sources: design uncertainty, requirement uncertainty, volitional uncertainty, and human errors. Design uncertainty includes variables over which the engineer or designer has direct control but has not yet decided upon [13].

4. Proposal

4.1. Holistic Approach

The holistic fuzzy approach proposed in this paper provides a structured framework to think about the whole problem and, at the same time, to pay attention to the details. It can be thought of as "the law of the process" that shows the network of components with their relationships.

From an observational point of view, Koestler [14] argues that living organisms, as well as social structures, are organized in hierarchical schemes. These arrangements can be thought of as ascending series of levels of increasing complexity. Components appear as "parts" or "wholes" within the hierarchy, depending on how they are viewed. A "part", as the word is commonly used, means something fragmentary that cannot exist by itself. Conversely, a "whole" is something complete. Koestler points out that neither exists in such an absolute way. On this basis, he proposes the name "holon" for such a component, from the Greek holos (whole) plus the suffix -on, suggesting a part or particle, as in electron or proton. The inherent characteristic of a holistic structure is thus that it is much more than an aggregation of parts. Holons at upper levels have high conceptual content together with low precision, while at lower levels less conceptual and more precise elements are found, forming a network of strong inter- and intra-level relationships.

Complex problems where HF is present seem to follow this pattern and will be modeled under this perspective in this paper.

4.2. Cognitive Fuzzy Maps

When we started the task of controlling HF, we faced the problem of finding a formal tool that would allow us to:

• Represent and connect soft and hard variables.

• Capture the dynamics of complex systems.

• Deal with uncertainty.

Based on these requirements, Cognitive Fuzzy Maps (CFM), introduced by Bart Kosko [15] under the name fuzzy cognitive maps, seem to have interesting potential to represent HF.

A CFM is a fuzzy structure that represents causal reasoning with the possibility of feedback. Maps are made up of nodes (fuzzy concepts), which can be partially activated, related through fuzzy causal rules known as causal edges, which can be expressed as linguistic labels if required. Any information or data, hard or soft, can be included through them. CFMs can be said to constitute causal images of a system, an approximation of its behavior; they work by representing the system dynamics. Good performance will be obtained when the essence of the problem is captured. This task is in experts' hands: "translating" concepts into linguistic labels and edges into degrees of activation.

A CFM allows good or bad system behavior to be simulated; in this way a tendency can be obtained.
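Because experts usually judge causal influence in words, one simple way to turn their linguistic labels into numeric edge weights is a fixed lookup scale combined with averaging across experts. The Python sketch below is only an illustration under stated assumptions: the five labels, their numeric values and the use of a plain arithmetic mean are hypothetical choices, not part of the method described in this paper.

```python
# Hypothetical linguistic scale for the strength of a causal edge.
# The labels and numeric values are illustrative assumptions.
LINGUISTIC_WEIGHTS = {
    "strong negative": -1.0,
    "moderate negative": -0.5,
    "none": 0.0,
    "moderate positive": 0.5,
    "strong positive": 1.0,
}


def edge_weight(opinions: list) -> float:
    """Map each expert's label to a number and return the mean (assumed aggregation)."""
    if not opinions:
        raise ValueError("at least one expert opinion is required")
    values = [LINGUISTIC_WEIGHTS[label] for label in opinions]
    return sum(values) / len(values)


# Three hypothetical experts judging the edge "competence -> abilities".
w = edge_weight(["strong positive", "moderate positive", "strong positive"])
print(round(w, 3))
```

Since every value on the assumed scale lies in [-1, 1], so does the average, which keeps the result within the range usually adopted for fuzzy cognitive map weights. Opposing judgements cancel out, which is one reason a real study might prefer a fuzzy aggregation operator instead of a mean.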

CFM Characteristics

Node: represents a concept or a variable. Qualitative values, such as fuzzy subsets, or quantitative values, such as values in the interval [0, 1], can be assigned to it. Nodes are commonly denoted $C_i$, for example $C_1$, $C_2$ and $C_3$ in the map of Fig. 3.

Edges: represent causal relationships $e_{ij}$ between node $C_i$ and node $C_j$, that is, $C_i \xrightarrow{e_{ij}} C_j$, where $e_{ij}$ denotes the degree of causality that node $C_i$ exerts on node $C_j$.

A positive edge indicates that if Ci increases, Cj increases, while a negative edge produces an inverse relationship. A null edge means there is no relationship between those nodes.

Causal relationship matrix and performance

The causal map can be represented through a weight matrix, or matrix of causal relationships, $E = [e_{ij}]$, in which the entry in row $i$ and column $j$ is the edge from node $C_i$ to node $C_j$.
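The matrix can be assembled from a list of signed edges, as in the following Python sketch using NumPy. The function name `causal_matrix` is hypothetical, and the check that weights lie in [-1, 1] reflects the usual fuzzy cognitive map convention rather than anything stated in this paper. The two example edges, C1 → C2 and C3 → C1, are those described for the map of Fig. 3; all other entries are zero.

```python
import numpy as np


def causal_matrix(concepts, edges):
    """Build E with E[i, j] = e_ij, the causal weight of concept i on concept j."""
    index = {name: k for k, name in enumerate(concepts)}
    E = np.zeros((len(concepts), len(concepts)))
    for (source, target), weight in edges.items():
        if not -1.0 <= weight <= 1.0:
            raise ValueError(f"edge {source}->{target} outside [-1, 1]")
        E[index[source], index[target]] = weight
    return E


concepts = ["C1", "C2", "C3"]
edges = {("C1", "C2"): 1.0, ("C3", "C1"): 1.0}
E = causal_matrix(concepts, edges)

# Row i lists the effects of C_i; column j lists the causes of C_j.
v = np.array([0.0, 0.0, 1.0])  # only C3 activated
print(v @ E)                   # one-step direct effect of the activation
```

Multiplying a row vector of activations by E sums, for each concept, the weighted influences it receives; here activating C3 alone propagates in one step to C1 only, because C3 → C1 is the only edge leaving C3.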

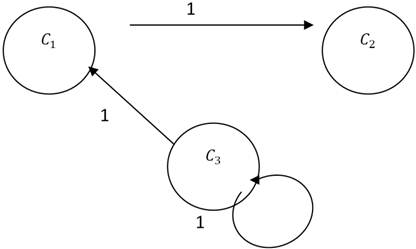

Figure 3. Cognitive Fuzzy Map (CFM).

The CFM shown in Fig. 3 is simple, as concepts and causal relationships take values in the set {-1, 0, 1}. It is just one way of modeling the real system and is presented as an example to show how a CFM works. Designing a CFM is an interdisciplinary task that is often carried out by experts.

The matrix of causal relationships could be the following:

This can be read as follows:

Where a zero is found there is no causal relationship between concepts, whereas a 1 means a positive causal relationship. Thus, an increase in C1 produces an increase in C2, while an increase in C3 produces an increase in C1. So C3 makes an increase in C3.

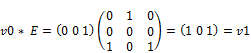

An initial vector $v_0 = (0, 0, 1)$ is proposed in order to observe how the system works. In this example, C3 is activated with a value of 1 (competence improvements are implemented in the system) and the others remain at zero. Vector $v_0$ and the matrix of causal relationships are then multiplied:

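$$v_1 = v_0\,E$$

where $E$ is the matrix of causal relationships; the multiplication is then repeated with each new vector.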

After a number of iterations, the state vector $v_2$ is obtained; that is, the system tends to this state when the cycle is initiated with vector $v_0$.

The outputs can be interpreted as follows:

An increase in individual competence (v0) produces an increase in abilities and an increase in the performance of the procedure, where an increase means a suitable procedure and a decrease means the contrary. It can be seen that the system converges to a vector with all its concepts activated. Since this is a simple example, the conclusion that an increase in competence leads to an increase in itself may be utopian.
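The iteration above can be reproduced numerically under stated assumptions, as in the following Python sketch. The full matrix of the example is not reproduced in the text, so the sketch keeps only the two links stated there (C1 → C2 and C3 → C1); the concept labels (C1 abilities, C2 procedure performance, C3 competence) are inferred from the interpretation above. The update rule, $v_{k+1} = f(v_k + v_k E)$ with $f$ a sign threshold onto {-1, 0, 1}, is one commonly used fuzzy cognitive map variant that keeps each concept's previous activation; it is an assumption, not necessarily the rule used by the authors.

```python
import numpy as np

# Concept labels inferred from the example (assumed).
LABELS = ["C1 abilities", "C2 procedure performance", "C3 competence"]

# E[i, j] = e_ij; only the two links stated in the text are set:
# C1 -> C2 and C3 -> C1.
E = np.array([
    [0, 1, 0],
    [0, 0, 0],
    [1, 0, 0],
])


def step(v: np.ndarray, E: np.ndarray) -> np.ndarray:
    """One update v_{k+1} = f(v_k + v_k E), f = sign threshold (assumed rule)."""
    return np.sign(v + v @ E).astype(int)


def run_cfm(v0, E, max_iter: int = 20) -> list:
    """Iterate from v0 until the state stops changing; return all visited states."""
    states = [np.asarray(v0, dtype=int)]
    for _ in range(max_iter):
        nxt = step(states[-1], E)
        states.append(nxt)
        if np.array_equal(nxt, states[-2]):
            break
    return states


trajectory = run_cfm([0, 0, 1], E)
for k, v in enumerate(trajectory):
    print(f"v{k} = {v.tolist()}")
print(dict(zip(LABELS, trajectory[-1].tolist())))
```

Under these assumptions the state goes from (0, 0, 1) to (1, 0, 1) and reaches (1, 1, 1) at $v_2$, where it stays, which is consistent with the convergence to a fully activated vector described above. Without the $v_k$ term (pure $v_{k+1} = f(v_k E)$), the same two links would only pass the activation along from C3 to C1 to C2 and then let it die out, which is why the memory term was chosen for this sketch.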

5. Conclusions

Engineering activities, from design to decommissioning, are carried out by people and are embedded in a human context. Decision making is in human hands as well. That is why the engineering profession must focus on keeping HF under control during all processes.

HF is usually left out of the formal models used in safety problems. QA measures are usually implemented to keep its values within certain limits deemed acceptable.

HF assessment is a complex task. HF is not like a physical magnitude: neither standardized procedures nor fundamental units exist to address it, and a strong subjective component is present. HF depends on the social, economic, financial and political context. In addition, it sometimes shows significant variability over time.

HF is not only complex but also introduces uncertainty that must be accounted for when a process is assessed, since, according to statistical information, structural failures are mainly due to human error.

Taking the previous paragraphs into account, a sound procedure and a suitable formal tool are necessary to model human complexity and uncertainty. A holistic approach and an alternative logic, fuzzy logic, are proposed in this paper to address HF in civil engineering problems. The monitoring and control procedure presented allows both the representative values and the reaction capacity of the system to be evaluated when corrective actions have to be taken. This dynamic and continuous HF management aims to keep the human component of engineering processes within the maximum accepted values. In this way, traditional technical approaches can be made more complete and improved, and decision makers would consequently have more information to guide their actions.

References